One important benefit of Bayesian statistics is that you can

provide relative support for the null hypothesis. When the null hypothesis is

true, p-values will forever randomly

wander between 0 and 1, but a Bayes factor has consistency (Rouder,

Speckman, Sun, Morey, & Iverson, 2009), which means that as the

sample size increases, the Bayes Factor will tell you which of two hypotheses

has received more support.

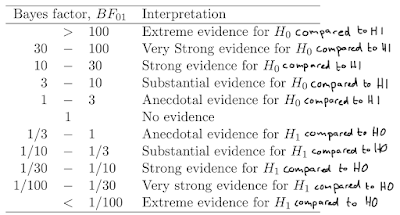

Bayes Factors can express relative evidence for the null hypothesis (H0) compared

to the alternative hypothesis (H1), referred to as a BF01, or relative evidence for H1 compared to H0 (a BF10).

A BF01 > 3 is sometimes referred to as substantial evidence for

H0 (see Wagenmakers,

Wetzels, Borsboom, & Van Der Maas, 2011), but what they really mean is

substantial evidence for H0 compared to

H1.

Table 1 from

Wagenmakers et al., 2011, #fixedthatforyou

Since a Bayes Factor provides relative support for one over another hypothesis, the

choice for an alternative hypothesis is important. In Neyman-Pearson Frequentist approaches, researchers have to specify the alternative hypothesis

to be able to calculate the power of their test. For example, let’s assume a

researcher no idea what to expect, and decided to use the average effect size

in psychology of d = 0.43 as the

expected effect when the alternative hypothesis is true. It then becomes easy

to calculate that you need 115 participants in each group of your experiment,

if you plan to perform a two-sided independent t-test with an alpha of 0.05, and you want to have 90% power.

When calculating the Bayes Factor, the alternative is

specified through the r-scale. This is a scale for the Cauchy prior, which is

chosen in such a way that the researcher expects there is a 50% chance of

observing an absolute effect larger than the scale value chosen. As Rouder and

colleagues say: “A well-known and attractive alternative to placing priors on

the mean µ is to place them on effect size, where effect size is denoted by δ

and given as δ = µ/σ. Thus, the researcher above would choose an r-scale of

0.43.

The default Bayesian t-test

originally used a r-scale of 1 (a very large effect), but the updated default

test uses a r-scale of 0.707. This means that whenever you perform a study

where you calculate the default Bayes Factor, and find a BF10 < 1/3,

you have observed support for the null-hypothesis, relative to an alternative

hypothesis of an effect of d = 0.707.

Let’s assume the default Bayesian

t-test answers a question

you are interested in, and you want to know whether your data is stronger

support for a

d = 0 compared to a

d = 0.707. You know that to have 90% power to

observe a

d = 0.707 in an independent

t-test,

you need 44 participants in each condition, and you have heard people recommend

to use at least 50 participants in each condition, so you set out to collect 50 participants in each condition. You

are also completely fine with a null-result: You have submitted your study to

a journal that accepts pre-registered reports, and the reviewers there agreed the

data will be interesting whether they support the null hypothesis or the

alternative hypothesis. What is the probability you will observe support for H0

relative to H1 (see

this paper by Felix Schonbrodt and colleagues for similar Frequentist calculations of Bayes Factors)?

There are no Bayesian power calculators (yet, as far as I know). Bayes Factors

express the relative evidence in your data, and you don’t need a threshold to

interpret a Bayes Factor. In principle, any BF

10 < 1 provides

stronger support for the null hypothesis than for the alternative hypothesis. But

no one will be convinced if you argue for the null hypothesis based on a BF

10

= 0.98, and it’s practical to have some sort of agreed upon threshold where we

all agree data can be interpreted as ‘support’ – hence tables with Bayes Factor

thresholds as the one reported in Wagenmakers et al, 2011 (see above). So what are the chances

of actually observing relative support for the null hypothesis, compare to an

alternative hypothesis of

d = 0.707, with 50 participants in each condition,

when you want a

BF10 < 1/3?

Using the R code below, you can easily calculate this

probability yourself. It also provides the probability of finding support for

the alternative hypothesis. This means you can run the simulations twice, once

setting the true effect size to 0, once setting the true effect size to 0.707,

and see whether your sample size is large enough to get what you want from the

data you plan to collect.Note you need quite some simulations to be accurate, and this takes minutes)

Running these simulations, we see there’s about a 69%

probability of finding support for the null-hypothesis with a BF

10

< 1/3 if the true effect size is 0. In the histogram of Bayes Factors below, this is the distribution to the left of the left vertical red line. Approximately 1.6% of the Bayes Factors give misleading evidence (a BF > 3, to the right of the right vertical red line) and around 30% of the Bayes Factors are inconclusive evidence.

The second set of simulations reveals there’s approximately a 85% chance of finding a

BF

10 > 3 when the true effect size is 0.707. Based on these data,

and your explicit interest in providing support for the null-hypothesis, you

might want to collect some more participants.

Let’s look at some other scenarios. Imagine that in the

previous situation, the true effect size was d = 0.43. It turns out that 13% of

our experiments will find relative support for the null-hypothesis, 38% of the

tests will find relative support for the alternative hypothesis, and 48% of the

tests will be inconclusive, with a Bayes Factor between 1/3 and 3. Luckily,

with Bayesian statistics you can continue collecting data, but since no one has

unlimited resources, it’s important to know whether it’s feasible to collect

sufficient data to answer your question in the first place. For example, in the default Bayesian t-test, don’t bother trying to find ‘strong

evidence’ (BF < 1/10) for the null if you are planning to collect less than 200 participants. When the null is really true, and you plan

to collect data from 100 to 200 participants in each condition, you’ll never find the evidence you are looking

for. With 300 participants in each condition, you’ll start to get around 33%

probability of finding what you want, indicating a threshold of 10 might be unreasonable

for single studies, and more suitable for meta-analyses.

The simulations also show that the probability of an

incorrect conclusion (observing support for H0 when H1 is true, or support for

H1 when H0 is true) is very low. Indeed, it’s so low, that we might want to

reconsider used a Bayes Factor of 3 as a threshold.

For example, if we set a threshold as low as 1.2, instead of

3, the simulations show that, when the null-hypothesis is true, approximately 5% of

the Bayes Factors we observe will provide relative support for the alternative

hypothesis, and over 90% of the Bayes Factors will provide relative support for

the null hypothesis, with 50 participants in each condition (the remainder, 5% fall in the small inconclusive evidence area). This may not be ‘substantial

evidence’ but you won’t be wrong very often, in the long run, when you decide

to accept the null hypothesis over the alternative hypothesis of d = 0.707 when

you collect 50 participants in each condition.

You may not like the use of a cut-off value to indicate ‘relative

support’ as proposed by some Bayesians (Dienes,

2016; Wagenmakers et al., 2011). I think these cut-offs are

most important when planning an experiment. It makes sense to aim for support most

people will find convincing. When the data are in hand, you can interpret it

any way you like. If you agree, then important questions are which cut-off

values are acceptable under which circumstances? Can we develop formulas for

Bayesian power analysis instead of having to rely on simulations?

I learned something by playing around with these simulations,

and I expect others moving to Bayes Factors will have similar questions as I tried to examine here. Power analysis is Frequentist concept, and it might not be close to the heart of Bayesians, but I expect it meets a future need of researchers

switching to Bayesian statistics, especially when they want to design studies

that provide support for the null.

Dienes, Z.

(2016). How Bayes factors change scientific practice. Journal of

Mathematical Psychology. http://doi.org/10.1016/j.jmp.2015.10.003

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson,

G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237.

http://doi.org/10.3758/PBR.16.2.225

Wagenmakers, E.-J.,

Wetzels, R., Borsboom, D., & Van Der Maas, H. L. (2011). Why

psychologists must change the way they analyze their data: the case of psi:

comment on Bem (2011). Retrieved from

http://psycnet.apa.org/journals/psp/100/3/426/